ANTICIPATED FUTURE AND THE DILEMMA OF INTELLIGENCE

“Preparing for a radical restart and some kind of Biblical end-time?”

In 2017, I wrote an outlook with predictions on ‘our future’. Admittedly, not all forecasts were precise: some missed by years, and others were distorted by wars and the pandemic. Yet when I stumbled upon the article last week, I was struck by a familiar realization: the course of technological progress and its potential futures is not only observable but also seems predictable, given the way progress tends to happen.

Motivated by this, I decided to revisit what I termed “anticipated futures” and explore “the dilemma of intelligence” anew, reflecting on humanity’s destiny in an emerging technological tsunami. The recent retirement of Boston Dynamics’ hydraulic “Atlas” and the introduction of its next-generation humanoid, merging robotics with artificial intelligence, illustrate that the bounds of progress keep stretching ahead of us. We were once teased with flying cars, and then with self-driving cars; perhaps in the interim Tesla’s Optimus robot will chauffeur us toward the futuristic visions of Marty McFly and Doc Brown.

Over the years, the discourse around technological stagnation has grown louder. “Moore’s Law”, named after Intel co-founder Gordon Moore, who observed in 1965 that the number of components on a chip was doubling every year (a rate he revised in 1975 to roughly every two years), suggested that processing power grows exponentially. Critics have proclaimed the death of Moore’s Law, deeming it unsustainable as technological demands escalate. Nvidia CEO Jensen Huang and many others have argued that keeping up with it has become so resource-intensive that Moore’s Law is basically dead. However, recent advancements at Nvidia challenge this notion: the company is employing 3D components and novel materials to push the boundaries further, and, at least from a resource standpoint, Nvidia today has the capacity to invest in progress.
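To make the compounding concrete, here is a minimal sketch of what a fixed doubling period implies. It assumes an idealized, constant two-year doubling (the real cadence has varied over the decades), and the function name `moores_law_factor` is hypothetical, not taken from any library.

```python
def moores_law_factor(years: float, doubling_period_years: float = 2.0) -> float:
    """Growth multiple after `years` if a quantity doubles every `doubling_period_years`."""
    return 2 ** (years / doubling_period_years)


# Illustrative only: 60 years at a two-year doubling period is 30 doublings.
factor = moores_law_factor(60)
print(f"{factor:.3e}")  # 1.074e+09, i.e. roughly a billion-fold
```

Stretching the doubling period to three years over the same 60 years gives only 2^20, about a million-fold, which shows why even a modest slowdown in the cadence weighs so heavily in the debate over whether Moore’s Law is “dead”.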

For decades, I have been inspired by the work of futurist Ray Kurzweil. His 1999 prediction in “The Age of Spiritual Machines” posited that by 2029, computers would achieve human-level artificial intelligence, capable of passing the Turing Test. At the time, this hypothesis was met with skepticism and dismissed as a century away, if possible at all. Yet today, the horizon for this milestone appears dramatically nearer, perhaps even within the next year, as we grapple with refining large language models to manage their tendency to hallucinate, and even deliberately temper their intelligence so that they do not come across as too good.

What does “the dilemma of intelligence” mean in this context?

The issue at the core of using “intelligence” in the term “Artificial Intelligence” lies in its profound and multi-faceted implications. We could also question the “artificial” part, but when we attribute human-like qualities to intelligence, we’re not merely referring to the ability to learn, understand, and apply knowledge. We’re also encompassing emotional, social, and creative capacities, among others. The label of “Artificial Intelligence” inherently elevates expectations for these systems, suggesting they could replicate human thought processes or even achieve a form of consciousness or sentience.

But is it intelligence though?

The term “Artificial Intelligence” was coined by John McCarthy in the 1955 proposal for the 1956 Dartmouth Conference, which marked the nascent stage of AI as a distinct field. This stood in contrast to “Cybernetics,” a term introduced by Norbert Wiener in 1948 to describe the study of control and communication in animals and machines, focusing on feedback mechanisms and system regulation rather than on mimicking human thought. The preference for AI over Cybernetics highlighted a shift towards creating machines capable of independent thought and decision-making, an ambition laden with both vast potential and ethical quandaries. This seminal choice anticipated a future where machines might not only augment human capacities but also challenge our very concepts of intelligence, consciousness, and life.

The selection of “Artificial Intelligence” over alternatives like “Cybernetics” marked a significant philosophical divergence in the development of computational technologies. It was a battle of allure more than logic, with “AI” proving to be the catchier acronym. I fancy it myself; after all, these are my initials…

More than seventy years after Wiener introduced cybernetics, the term seems a more apt description of what large language models have become. And yet, the journey of development is far from complete, even as we approach the limits of human-generated training data.

When we talk about “thinking” today, we do not mean thinking in a Hegelian sense, but rather a computational process of sorting, processing, and analyzing data. For centuries, science and philosophy have grappled with what ignites the spark of a thought, a debate that remains unresolved. If intelligence is defined not simply as processing data but as a human trait, then replicating it technologically also means replicating the human aspect. The ultimate implication is that we must be able to define and understand computer operations as distinct from human cognition: if we cannot precisely explain the difference, we cannot rule out that replication is theoretically possible. In short: if our brains are merely computational and part of a physical system, then, theoretically, computers could replicate any human ability.

If artificial general intelligence (AGI) is to meet or exceed human capabilities, we will face a paradoxical situation in which the distinction between human and machine becomes undefined. If computers can replicate everything humans are capable of, the line between what is naturally human and what is artificially created blurs. Without a precise account of the difference, distinguishing a replica from the original becomes impossible, a consideration that is particularly relevant when we contemplate integrating with AI. If an entity is conscious, we might never recognize it; this is already a reality in our interactions with advanced AI systems and in our understanding of consciousness in humans. Since we do not fully understand what constitutes consciousness, we cannot definitively ascertain it in other living beings today; and if we can scientifically resolve what consciousness entails, it can, theoretically, be replicated. This argument holds not only for performing tasks or processing information but also for exhibiting a wide range of emotions, intuitive responses, and ethical reasoning, which could, at least in principle, be simulated or replicated in machines.

In such scenarios, emotional, social, and creative capacities can be simulated or artificially triggered, producing outputs identical or superior to human ones. If technology can replicate the understanding of consciousness and the human mind, then we might face the dystopian prospect of becoming what I once termed “Homo Obsoletus,” a theme further explored in our forthcoming book, “The Singularity Paradox – Bridging the Gap between Humanity and AI”, co-authored with Dr. Florian Neukart.

This raises ethical dilemmas and moral questions regarding the creation of entities that might surpass human intelligence and possibly possess consciousness, including debates about the rights of AI, the responsibilities of creators, and the global impacts of their decisions, aspects addressed in other publications.

In the Greek myth, Narcissus is captivated by his own image. In what I describe as the ‘final narcissistic injury,’ the reflection remains, but there is no self left to recognize it: the ultimate loss of self-perception. But what would that mean? If there is no one to perceive, can we even argue that a dystopia exists?

What follows from such an argument is that we need to understand how an artificially intelligent and potentially conscious entity operates. From a philosophical and scientific standpoint, the common challenge is to acknowledge that these entities differ from humans without knowing how. In principle, the question of what it means to be a Mensch must forever remain unanswered.

The path towards Singularity

Kurzweil’s forecast of a ‘Technological Singularity by 2045’, a future where artificial intelligence surpasses human beings as the smartest entities on Earth, was once deemed fanciful, the stuff of science fiction, relegated to a distant tomorrow. Yet as we edge closer, the years 2029 and 2045 no longer seem far off. In light of this, my conviction grows (setting aside the capitalist and economic opportunities) that mastering the art of “anticipating the future” is, if not the single most crucial competency, certainly among the most crucial ones we need to cultivate to navigate what for many remains an unimaginable future.

Today, the pertinent question is not whether something is feasible, but rather what kind of future we desire. If anything conceivable is theoretically possible, then we shoulder a profound responsibility. Conversely, if limitations exist, we must grasp them fully to accurately predict potential futures and understand the realm of possibilities.

Can we, then, effectively anticipate future scenarios by examining the current and historical progression of technology, and extrapolating these trends into hypothetical futures? How can we improve our foresight of what might lie ahead?

A practical exercise might involve studying Kurzweil’s “law of accelerating returns.” By reflecting on this model, we can question and debate what we believe is the most plausible outcome.

Kurzweil argues that technological progress marches on at a nearly constant exponential rate, undeterred by external disruptions such as wars or scientific breakthroughs. For over forty years, he has documented this phenomenon in his “Price-Performance of Computation” chart, tracing its lineage back to one of Konrad Zuse’s first computers in 1939, which performed a mere 0.00007 calculations per second per dollar. In his latest update in 2023, Kurzweil notes that we have reached 35 billion calculations per second per dollar, an increase he puts at a staggering twenty quadrillion times over some eighty years.
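The arithmetic behind such a chart can be checked directly. The sketch below takes the two endpoints exactly as quoted in the paragraph above (reading both as calculations per second per dollar, which is an assumption about the units) and computes the implied growth factor and average doubling time. Taken at face value, these endpoints give a factor of about 5 × 10^14 (roughly 500 trillion) rather than twenty quadrillion; the larger figure presumably rests on different endpoint values in Kurzweil’s published chart, which would need to be checked against the original.

```python
import math

# Endpoints as quoted in the text; units assumed to be calculations per second per dollar.
START_YEAR, START_VALUE = 1939, 0.00007
END_YEAR, END_VALUE = 2023, 35e9

factor = END_VALUE / START_VALUE          # overall improvement between the endpoints
years = END_YEAR - START_YEAR             # 84 years
doublings = math.log2(factor)             # number of doublings needed to reach `factor`
doubling_time_months = 12 * years / doublings

print(f"growth factor: {factor:.2e}")                                # 5.00e+14
print(f"doublings: {doublings:.1f}")                                 # 48.8
print(f"implied doubling time: {doubling_time_months:.1f} months")   # 20.6 months
```

Note that this is only an average over the whole period: a single doubling time says nothing about whether the pace has been speeding up or slowing down within it.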

In ‘The Singularity Paradox’ – set to be released in early 2025 – we take a deeper look into the potential implications of such a technological singularity. This conversation is crucial as we approach critical junctures, where the future no longer looms as a distant specter but as an imminent reality with tangible and far-reaching consequences.

Consider Kurzweil’s graph for a moment. What do you believe?

I am particularly interested in scenarios where you envision no progress. Share your thoughts below.

Our outlook on the future can be characterized in at least three ways:

- We believe that progress will continue to accelerate exponentially, as demonstrated over the past 80 years.

- We believe that we have reached a plateau in progress; if so, what leads us to this conclusion?

- We believe that regression is possible, or that external forces could dismantle our technological advancements; what could drive such regression?
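One way to make these three outlooks tangible is to write each one as a simple curve. The sketch below is a toy model only: the growth rate, ceiling, shock year and decay rate are arbitrary, hypothetical parameters, not estimates from any data. It models the first outlook as exponential growth, the second as logistic growth that levels off at a ceiling, and the third as exponential growth interrupted by an external shock and followed by decline.

```python
import math


def exponential(t: float, x0: float = 1.0, rate: float = 0.35) -> float:
    """Outlook 1: uninterrupted exponential growth, x0 * e^(rate * t)."""
    return x0 * math.exp(rate * t)


def logistic(t: float, x0: float = 1.0, rate: float = 0.35, ceiling: float = 100.0) -> float:
    """Outlook 2: growth that starts at x0 and saturates at `ceiling` (a plateau)."""
    return ceiling / (1 + (ceiling / x0 - 1) * math.exp(-rate * t))


def boom_and_bust(t: float, x0: float = 1.0, rate: float = 0.35,
                  shock_year: float = 10.0, decay: float = 0.2) -> float:
    """Outlook 3: exponential growth until a hypothetical shock, then exponential decline."""
    if t <= shock_year:
        return exponential(t, x0, rate)
    return exponential(shock_year, x0, rate) * math.exp(-decay * (t - shock_year))


# Print the three trajectories side by side at a few points in time.
for year in (0, 5, 10, 15, 20):
    print(year, round(exponential(year), 1), round(logistic(year), 1),
          round(boom_and_bust(year), 1))
```

All three curves start at the same value, and the exponential and logistic curves stay close in the early years (about 1.42 versus 1.41 after one period with these parameters). A short stretch of history can therefore be consistent with both the first and the second outlook; telling them apart requires looking for the mechanisms that would impose a ceiling, not just at the data.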

There are many strong claims that scientific breakthroughs are reaching their limits and that economic growth will stall, stifling investment. However, it appears equally plausible that this path of advancement could continue for some years; at the very least, it is hard to dismiss this possibility entirely.

This brings us to my point about anticipated futures. In today’s society, discussions often reach an impasse, as viewpoints are polarized and static, failing to appreciate the dynamic nature of the world. But the crux of the matter isn’t about who is right; it’s about understanding what your position means to you.

If you can categorically rule out a scenario of progress, then you can focus your strategies accordingly. Otherwise, anticipating various future scenarios becomes essential to prepare for possible outcomes. If you believe progress will continue, what does that imply? What potential business models might emerge? What implications could this have for humanity? Is this a future worth striving for and living in?

Turning back to 2017, what was once labeled science fiction and speculative futurism has surprisingly turned into realism, if we maintain a grip on reality, that is. Now, seven years later, many have changed their perspectives. Yet the challenge of forecasting the future remains formidable. Consider, for example, Elon Musk’s 2018 departure from OpenAI’s board of directors, which Sam Altman attributed to the organization lagging behind in development. Two years after the so-called ‘intelligence explosion’ driven by Large Language Models (LLMs), we find ourselves navigating a period marked by astonishment and disorder. Developments in AI have accelerated unexpectedly, causing the marginal cost of intelligence to plummet towards zero.

Kurzweil extends this idea of exponential progress beyond human language comprehension to encompass almost all technological events, suggesting that a more fitting term might be “Large Event Models” (LEMs). This revision highlights the broad applicability and impact of these developments.

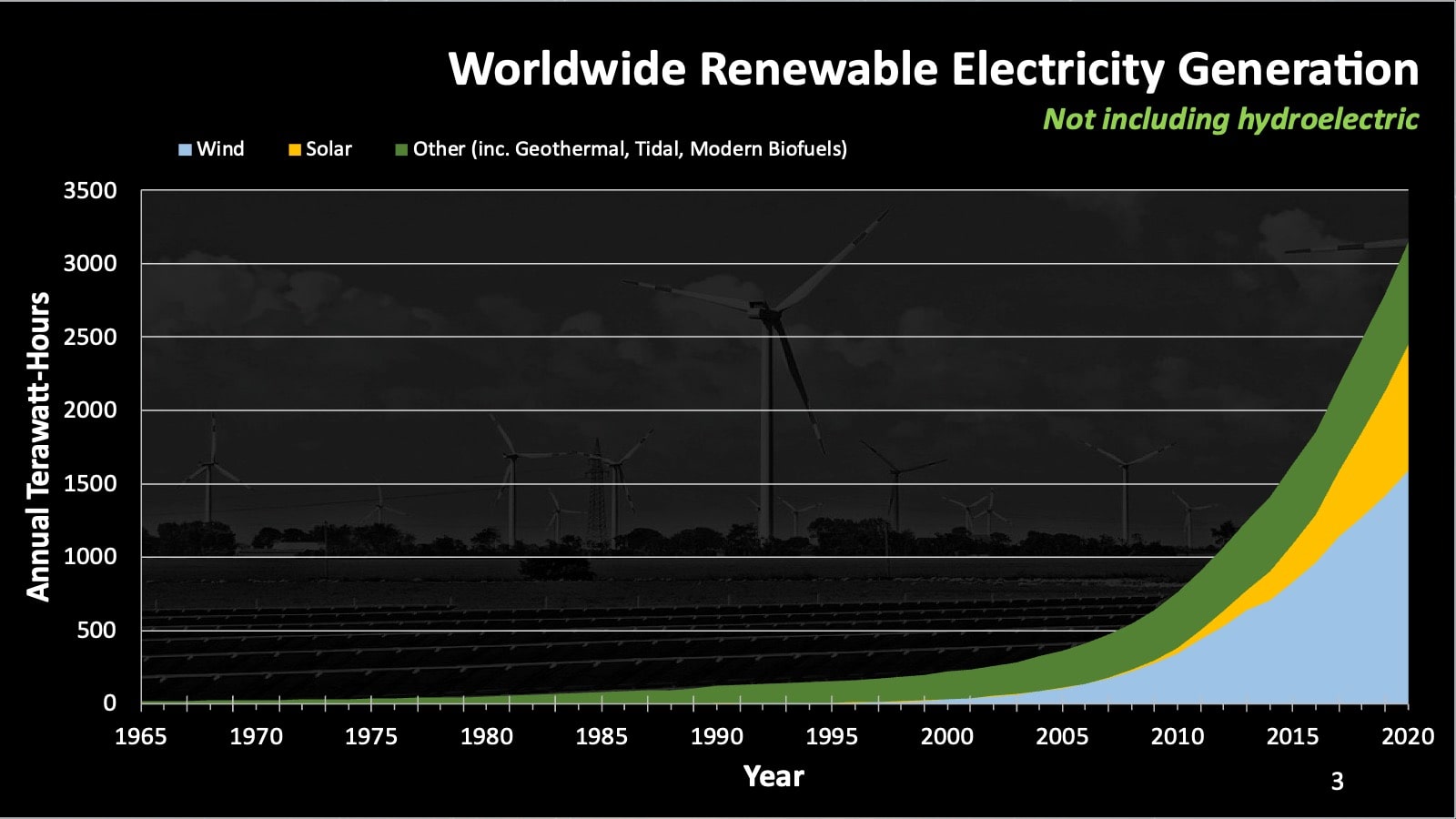

Consider the exponential growth in renewable energy as an illustrative example. The next 2-3 years will likely see a significant surge in demand due to electrification and increased computational needs. Predictions vary from a doubling to a threefold increase in demand. With such pressure, investment is sure to follow, spawning new business models and scientific advancements.
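The forecast range in the paragraph above can be translated into an implied annual growth rate. This is a minimal sketch, assuming smooth compound growth over the period (real demand will not grow that evenly); the function name `implied_annual_growth` is hypothetical.

```python
def implied_annual_growth(multiple: float, years: float) -> float:
    """Compound annual growth rate that turns 1 into `multiple` after `years` years."""
    return multiple ** (1 / years) - 1


# Scenarios from the text: demand doubling or tripling over two to three years.
for multiple in (2, 3):
    for years in (2, 3):
        rate = implied_annual_growth(multiple, years)
        print(f"{multiple}x in {years} years -> {rate:.0%} per year")
```

The four combinations print roughly 41%, 26%, 73% and 44% per year (in that order), so the quoted range corresponds to compound annual demand growth of about 26% to 73%.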

For years, I have maintained that the marginal costs of energy, much like those of intelligence, are poised to plummet to near zero within the next decade, propelled by relentless progress in the solar and wind energy sectors. We do not have an energy problem; we have a storage and distribution problem. Although the journey may include its share of hype and subsequent disappointment, breakthroughs seem all but inevitable and are poised to disrupt the market, creating new opportunities.

This pattern resembles the exponential trends along which technological progress typically seems to advance. Or do you see any reasons why this progress might suddenly come to a halt?

My prediction is clear. Fossil fuels won’t run out—they will simply become non-competitive.

Can we predict such a trajectory with certainty?

No. However, we can learn from leading corporations in these fields and understand technological advancements to better anticipate future developments. Current discussions around nuclear energy, additional coal, and ‘slow-tech’ seem less promising as pathways to follow.

Anticipating Futures: A Matter of Will and Possibility

There are numerous debates and examples surrounding our potential futures. Will efficiency in technology continue to improve? Can we enhance distribution systems? Are we capable of developing superior energy storage solutions? I believe our future can be decidedly positive, but this hinges on whether we are prepared to embrace the potential of exponential technologies, invest in human growth, and exert the effort required to build and execute new organizational and business models tailored to these anticipated future scenarios.

Ultimately, this reduces to a fundamental inquiry about what we deem possible. Do we believe in the possibility of progress? If not, what are the counterarguments, and what consequences do they entail? What actions should we then undertake? Conversely, if we believe progress is attainable, what prevents us from pursuing and constructing that future?

Some may choose to cling to a divine dimension, relying on a belief in deterministic progress as a comforting and less taxing way to understand the world. For others, developing a plausible and justified belief about the kind of future that can be created, and that is worth striving for, is the more thrilling and rewarding path. I have opted for the latter, finding great excitement in this pursuit. It appears that today’s discourse about the future is less about science fiction and more about “sci-phi”: a blend of scientific inquiry and philosophical exploration aimed at anticipating potential futures and acting upon the paths we desire.

Today seems an apt moment to ‘look backward’ in order to ‘look forward’ and potentially gain a clearer perspective of ‘the now’.

In essence, the future is shaped more by our actions than by our limitations, as the quest for infinitely better explanations and progress appears to be an inherent force of nature, whether viewed from a realist or physicalist standpoint.

This raises a critical question for humanity, applicable even at the individual level: If any future could be built, what would you build? In other words, what future is worth striving for?

As we approach the technological singularity—where technological growth becomes uncontrollable and irreversible, radically altering human civilization—it becomes clear that predicting specific outcomes is impossible. This singularity suggests a future where AI and other technologies could profoundly change employment, governance, and social structures.

So what will it be? Will AI development lead us toward a utopian reality, a dystopian collapse, or a transformation of human existence that defies our current understanding?

We need discussions on how humanity can navigate these possibilities to secure a future that aligns with our aspirations. In short: can we learn how to ‘anticipate the future’?

We are witnessing rising anxiety and challenges to self-control, Germany’s legalization of cannabis, and increased pressure and large investments in deep-tech, underscoring the renewed mantra that “Cash is king”. As the landscape of startups and innovation is poised for dramatic shifts, my 2017 reflections show that we can anticipate new futures today, while the coming years promise to deliver profound surprises for our species.

In transitioning from science fiction to “sci-phi” (the 2024 blend of scientific inquiry and philosophical exploration), we must prepare for the ethical, societal, and existential challenges humanity will face, applying practical philosophy to our anticipated futures. This preparation requires reforms in education, shifting from teaching what to think to teaching how to think; significant policy changes to foster global interdependence; and time set aside to take advantage of the superpowers our own technological progress has given us. From there, we can develop robust ethical frameworks for further research on and deployment of AI and quantum technologies.

As we celebrate the 300th birthday of Immanuel Kant, we are called to become the thinkers of our time. Philosophy will transform into a practice of thoughtful engagement, as Kant once envisioned, focusing on the humane aspect of our existence as Kant found it written in the Bible, while at the same time antagonizing theology as a whole.

As a reference point, here is a short abstract of my text from 2017:

_____________________________

Originally posted 29 December 2017 in Article, Consciousness, Philosophy, Quantum, Singularity, by Anders Indset

“We are moving closer to what might be some kind of Biblical end-time and a radical restart for humanity lies before us.”

It is easy to track the progress in technology and make predictions such as “IoT will go to market”, “AI will be important”, “CryptoCurrencies are here to stay”, “AR/VR/MR will enter our lives”, “Bio- and Nano Technology will be all over”. But that is not it. Homo sapiens are preparing to wake up as we move closer to some kind of radical restart of our society. It will not be a new chapter, but rather a completely new story for our species. It is now up to us to come up with models and new concepts on how to avoid becoming Homo Obsoletus. Our focus shifts from growth and expansion to defining the role which we (human beings) want to play in the exponential world. Here are some thoughts on an improvised future.

1. THE QUANTUM REVOLUTION – Changing our perception of the world

Be aware. The race is on. In Financial Services, Energy, Automotive, it is a battle for the positions at the top, because things will start shaking. Artificial Intelligence has already started to replace larger parts of our jobs as we have defined them, but until now this has been good news, as the need for new jobs has been higher than the number of jobs being replaced. “Well, isn’t it as it has always been? Jobs go away and new ones are created.” This was one of the most frequently asked questions in 2017.

NO! This time around it is different. Unlike previous revolutions (e.g. the Agricultural and Industrial Revolutions), there is a fundamental difference. Change has always been about Authorities and about Technologies. This time, however, the authorities are moved into algorithms. The new rulers of the world are algorithms run by a very small netocracy. Technologically speaking, AGI (Artificial General Intelligence) will turn our world upside-down, and an explosion of intelligence will lead us to a new perception of how we see the world.

Netscape 1.0 and the internet paved the way for the ups and downs of the “WEB” era. We “ended” that in 2006 and moved on to a ten-year “Mobile/App”-first era; this was harmless. In 2017 we started the next chapter, the AGI (Artificial General Intelligence) and Quantum Computing era: this is where the real change caused by technology will BEGIN. A quantum world will open our minds to a new dimension and a new era: an explosion of intelligence in a quantum world, moving beyond the Newtonian laws of physics.

2. HELLO ANXIETY – Complexity reveals itself

The “knowledge hype” is on, and with all the focus on (subjective) truths, we will in the upcoming years become even more obsessed with plausible explanations and justified true (subjective) beliefs as technology matures. The flip side of this will be that the complexity of the world will become transparent.

How little we really understand will be revealed, and this will lead to frustration. The dilemma stated by the great Bertrand Russell, that the intelligent are anxious while the stupid are self-confident, will influence our social and political systems more than ever. As the level of frustration rises, we need to find ways to cope with permanent revolution and change. How can we learn to expect the unexpected?

Whatever is thrown at us, we need to embrace it and create new models and questions.

3. THE ONLY VALUABLE OIL IS CANNABIS OIL

Some implications of the exponential world will be breakthroughs in energy. Oil recovered in 2017 due to some political restructuring and a somewhat artificial control of supply and demand. The trend is clear, though: crude oil is no longer competitive. We will of course never run out of this raw material, but it will be replaced. The only valuable oil in the future is cannabis oil. A stock market entry might be too early now, but THC-Trading, here we go!

As the success stories reach mainstream media, the new “wonder medicine” will take the role of paracetamol and penicillin, only this time it is a natural substance. A whole industry will break down and be forced to move out of old models and structures. Hemp can replace cotton and wood as materials for clothing and buildings: from drug to saviour, as our resources are scarce. While our trees take 15 years or more to grow, hemp needs only 90 days. Whereas 10 kg of cotton needs 18,000 liters (approx. 4,755 US gallons) of water, 10 kg of hemp needs only 4,000 liters (approx. 1,057 US gallons). Hemp will replace our plastic packaging; it is used as oil, in paper, as medicine, in food, in clothing and much more. In 2018, the mainstream media will, however, focus on progress in curing cancer and diseases such as Alzheimer’s and Multiple Sclerosis (MS), and on the impact THC / Cannabis Oil has on these. Most definitely, 2018 will be a year that opens the door for THC / Cannabis Oil. It is now just up to us to bet on when cannabis will exceed the trading price of crude oil.

4. THE END OF THE START-UP ERA

Successful business owners and politicians are still talking about “Digital Agenda” or “Digital Transformation” and “The Start-Up-Era” – but this will now be rock-dead. In case you haven’t noticed, the Klondike years of “start-ups” are over. What came as a “start-up” will now end as a “finish down”, as so many are still trying to build the new “Silicon Whathaveyou”. But I got news for you: it is not about silicon anymore, as the start-up hype comes to an end. And what about those simple new ideas? Forget your romance, ain’t gonna happen! The problems to solve are hypercomplex and need huge amounts of data and thousands of the brightest minds working together with the most complex algorithms, and can therefore only be solved by the large corporations.

Don’t get me wrong, it is still about creativity and creation, but there are no simple new ideas or eureka moments with shortcuts to fame and fortune. It is all about Entrepreneurship. We are back to the days of “Hard-Core-Entrepreneurship”. Trying Stuff – Leadership, Management and Execution – all world class – the recipe combined with some courage – boldness – risk of failure. As many will put their money into trying to build some new “Silicon-Copyhouses”, those that succeed will get back to focusing on entrepreneurship. As of 2018, we enter the years of entrepreneurship.

5. THE MENSCH – THE RADICAL RESTART

It is a strange world. What we do know, however, is that we live in one society. There is no outside of society; we have to cope with this strangeness together. There are no borders anymore for knowledge, cash, terrorism, education. It is one interdependent world. With an enemy approaching from other galaxies, we would probably pull our shit together quickly and understand this, but the more realistic scenario is that we will have to learn the hard way. We are steering towards a radical restart. Of course this will be painful, but as we move closer to what we are and how things are related, we end up with some kind of symbiosis between our heart and mind, and in positive terms, this should be a peaceful state.

The road ahead is rocky and the challenges are plenty. We cannot turn and surrender to technology for solutions; that would be negligent. Our brain is not an algorithm, and if it were, the outcome would be clear: eventually we would be replaced and become obsolete, Homo Obsoletus. Cracking the algorithm would mean no purpose, no void to fill. Luckily, our brain is more like an orchestra, most likely without any conductor, yet we are all playing the same melody, with different facets and tones and partly with different instruments. We need to continue to work on the melody. We need to create something that we want to live with. If it is a “God in Machine” (Homo Deus or Deus Ex Machina) that we are working on, then we’d better make sure it is one we want to live with: one that is aligned with all our humanist goals. Even though it will not be bad, it must be within our new systems and models and aligned with the goals of our species. There is some kind of conscious revolution in the making, and it is desperately needed. It will not happen in 2018, but we need to start preparing, as the Biblical end-time lies before us and a radical restart, for all that it takes and is worth, might be the only solution.

The future will be strange, as strange as we create it. You will still have your reality and I will have my reality as we seek to better understand the world in which we find ourselves. We continue our search for plausible explanations and the nature of reality, and together fine tune the melody of co-existence.

To a peaceful 2018

____________________________