Are we Living in a (Quantum) Simulation?

The God Experiment – Let there be Light!

Constraints, observations, and experiments on the simulation hypothesis

By Florian Neukart (Terra Quantum AG), Markus Pflitsch (Terra Quantum AG), Michael R. Perelshtein (Terra Quantum AG) and Anders Indset

The question “What is real?” can be traced back to the shadows in Plato’s cave. Two thousand years later, René Descartes lacked knowledge about arguing against an evil deceiver feeding us the illusion of sensation. Descartes’ epistemological concept later led to various theories of what our sensory experiences actually are. The concept of ”illusionism”, proposing that even the very conscious experience we have – our qualia – is an illusion, is not only a red-pill scenario found in the 1999 science fiction movie ”The Matrix” but is also a philosophical concept promoted by modern tinkers, most prominently by Daniel Dennett. He describes his argument against qualia as materialistic and scientific.

Reflection upon a possible simulation and our perceived reality was beautifully visualized in “The Matrix”, bringing the old ideas of Descartes to coffee houses around the world. Irish philosopher Bishop Berkeley was the father of what has later been coined as “subjective idealism”, basically stating that “what you perceive is real” (e.g., ”The Matrix” is real because its population perceives it). Berkeley then argued against Isaac Newton’s absolutism of space, time, and motion in 1721, ultimately leading to Ernst Mach and Albert Einstein’s respective views. Several neuroscientists have rejected Dennett’s perspective on the illusion of consciousness, and idealism is often dismissed as the notion that people want to pick and choose the tenets of reality. Even Einstein ended his life on a philosophical note, pondering the very foundations of reality.

With the advent of quantum technologies based on the control of individual fundamental particles, the question of whether our universe is a simulation isn’t just intriguing. Our ever-advancing understanding of fundamental physical processes will likely lead us to build quantum computers utilizing quantum effects for simulating nature quantum-mechanically in all complexity, as famously envisioned by Richard Feynman.

Finding an answer to the simulation question will potentially alter our very definition and understanding of life, reshape theories on the evolution and fate of the universe, and impact theology. No direct observations provide evidence in favor or against the simulation hypothesis, and experiments are needed to verify or refute it. In this paper, we outline several constraints on the limits of computability and predictability in/of the universe, which we then use to design experiments allowing for first conclusions as to whether we participate in a simulation chain. We elaborate on how the currently understood laws of physics in both complete and small-scale universe simulations prevent us from making predictions relating to the future states of a universe, as well as how every physically accurate simulation will increase in complexity and exhaust computational resources as global thermodynamic entropy increases.

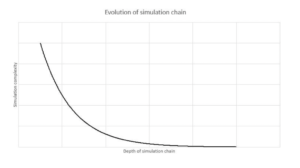

Eventually, in a simulation in which the computer simulating a universe is governed by the same physical laws as the simulation and is smaller than the universe it simulates, the exhaustion of computational resources will halt all simulations down the simulation chain unless an external programmer intervenes or isn’t limited by the simulation’s physical laws, which we may be able to observe. By creating a simulation chain and observing the evolution of simulation behavior throughout the hierarchy taking stock of statistical relevance, as well as comparing various least complex simulations under computability and predictability constraints, we can gain insight into whether our universe is part of a simulation chain.

I. INTRODUCTION

A dream within a dream from the ancient philosophical thinking of inception is nothing but today’s reflection of the simulation within a simulation. Over the past years, the question of reality has gained massive mainstream media attention as prominent figures like Elon Musk have stated there is a ”one in billions” chance we do not live in a simulation [1], and pop star astrophysicist Neil deGrasse Tyson has also jumped onto the idea stating that the probability is more than 50% [2]. In addition, philosopher David Chalmers has also caught on to the belief that we likely live in a simulation [3,4], pushing for further examination of the very notion.

However realistic or plausible such a hypothesis [5, 6] may be, how could modern physics and mathematics support seeking evidence for such a case? Scientists have criticized the hypothesis made by philosopher Bostrom for being pseudoscience [7, 8] as it sidesteps the current laws of physics and lacks a fundamental understanding of general relativity. Suppose an external programmer – an entity running a simulation and characterized as external to the simulation – could define the simulation’s physical laws. What would an external programmer and beings within the simulation be able to calculate based on their understanding of physical laws? Moreover, theoretically or practically, could beings in the simulation conceive and implement the apparatus or tools to verify that they aren’t participating in a simulation chain?

While controversial, the question of whether we exist in a simulation and thus participate in a simulation chain cannot be answered with certainty today. Nevertheless, it is intriguing, as an answer to it could lead us to question our very definitions of life and spirituality. Suppose we spark a chain of simulations, each hosting intelligent life intending to simulate the universe. Would we classify each of the simulated life forms as actual life? What if we could confidently state that we are part of a simulation chain and simulated beings ourselves? Would that change our definition of what counts as ”real” or ”artificial” life? In the argument made by Bostrom, one premise is worth examination: if there is a physical possibility of creating a simulation, then based on the state of development and the relation to time access, there would most likely be a higher probability of our residing within such a simulation than our being the exact generation building such a simulation. Experiments are needed to gain deeper insights, but several constraints prevent us from designing experiments that directly answer the question of whether an external programmer has created the universe and whether it’s only one of infinite hierarchical simulation chains. However, it is possible to indirectly test the simulation hypothesis under certain assumptions. The outlined experiments for doing so involve creating a simulation, potentially resulting in a chain of simulations, and conducting observations on the simulation behavior within the confines of a hierarchy until statistical relevance can be obtained. Potential observations of note could include the emergence of intelligent life and its behavior, a reversal of global entropy, compactification of dimensions, or the evolution of simulations along the simulation chain (all of which are, based on the current understanding of physics, impossible for us to conduct in our universe, but an external programmer shall not suffer from such limitations). Designing such experiments leads to the ultimate boundaries of computability and predictability. Physical and computational constraints prevent us from simulating a universe equal in complexity and size to our universe and from making accurate predictions of the future, whether or not the ”real” or simulated universes are based on the same physical laws.

Moreover, the cosmos has yet to be fully understood. For example, the universe’s fate and how to unite quantum physics and general relativity are deep and open questions. Today, quantum theory is widely understood as an incomplete theory, and new models may be discovered that will further flesh out our understanding of what quantum theory has indicated thus far. However, the state of modern physics and our imagination allows us to conceive experiments and build advanced technologies to continue scientific progress; therefore, the current framework shall not hold us back from searching for evidence related to the simulation hypothesis. The entrance point, however, must be the current understanding of mathematics and the challenges associated with our current knowledge of physics. Therefore, conducting experiments on such a hypothesis naturally requires assumptions to be made.

Also, many open questions remain in living systems theory, and we don’t yet know with certainty whether or not we are the only intelligent species in the universe. Still, we can conceive experiments that help us to gain insights into the ultimate questions: Were our universe and everything in it created, or did it emerge by itself? Is our universe unique, or is it just one of many, as described by the many-worlds interpretation of quantum physics [9]? In the article, we outline some fundamentals of computing and physics, which will help us define the experiment’s constraints. First, quantum physics is the essential pillar we build our experiments on – ergo, the current understanding of quantum mechanics – as our current understanding constitutes the most fundamental physics in the universe that everything else is based upon. Secondly, we briefly introduce different fates of the universe that the scientific community assumes to be scientifically sound and further guide us in designing an experiment independent of how the universe evolves. Thirdly, we consider the ultimate limits of computability, which also lead us back to quantum physics, both when it comes to engineering quantum computers and simulating physical and chemical processes in the universe. While Alan Turing showed what is computable [10], we indicate which computers are constructible within this universe. Finally, we explore different interpretations of observations gained from simulation chains and individual specimens we base the proposed experiments on. We also investigate observations in our universe, indicating whether we participate in a simulation chain.

II. THE SIMULATION HYPOTHESIS

The simulation hypothesis, first proposed by philosopher Nick Bostrom in 2003 [5, 6], is the consequence of an assumption in a thought model, which is sometimes also called ”the simulation argument” [3,5,6]. It consists of three alternatives to the real or simulated existence of developed civilizations, at least one of which is said to be true. According to the simulation hypothesis, most contemporary humans are simulations, not actual humans. The simulation hypothesis is distinguished from the simulation argument by allowing this single assumption. It is no more likely or less likely than the other two possibilities of the simulation argument. In a conceptual model in the form of an OR link, the following three basic possibilities of technically “immature” civilizations – like ours – are assumed. At least one of the above possibilities should be true. A mature or post-human civilization is defined as one that has the computing power and knowledge to simulate conscious, self-replicating beings at a high level of detail (possibly down to the molecular nanobot level). Immature civilizations do not have this ability. The three choices are [5]:

- Human civilization will likely die out before reaching a post-human stage. If this is true, then it almost certainly follows that human civilizations at our level of technological development will not reach a post-human level.

- The proportion of post-human civilizations interested in running simulations of their evolutionary histories, or variations thereof, is close to zero. If this is true, there is a high degree of convergence among technologically advanced civilizations. None of them contain individuals interested in running simulations of their ancestors (ancestor simulations).

- We most likely live in a computer simulation. If this is true, we almost certainly live in a simulation, and most people do. All three possibilities are similarly likely. If we don’t live in a simulation today, our descendants are less likely to run predecessor simulations. In other words, the belief that we may someday reach a posthuman level at which we run computer simulations is wrong unless we already live in a simulation today.

According to the simulation hypothesis, at least one of the three possibilities above is true. It is argued on the additional assumption that the first two possibilities do not occur, for example, that a considerable part of our civilization achieves technological maturity and, secondly, that a significant amount of civilization remains interested in using the resources to develop predecessor simulations. If this is true, the size of the previous simulations reaches astronomical numbers in a technologically mature civilization. This happens based on an extrapolation of the high computing power and its exponential growth, the possibility that billions of people with their computers can run previous simulations with countless simulated agents, as well as from technological progress with some adaptive artificial intelligence, what an advanced civilization possesses and uses, at least in part, for predecessor simulations. The consequence of the simulation of our existence follows from the assumption that the first two possibilities are incorrect. There are many more simulated people like us in this case than non-simulated ones. For every historical person, there are millions of simulated people. In other words, almost everyone at our experience level is likelier to live in simulations than outside of them [3]. The conclusion of the simulation hypothesis is described from the three basic possibilities and from the assumption that the first two possibilities are not true as the structure of the simulation argument. The simulation hypothesis that humans are simulations does not follow the simulation argument. Instead, the simulation argument shows all three possibilities mentioned side by side, one of which is true. But it remains to be seen what that is. It is also possible that the first assumption will come true, according to which all civilizations and, thus, humankind will die out for some reason. According to Bostrom, there is no evidence for or against accepting the simulation hypothesis that we are simulated beings, nor the correctness of the other two assumptions [5].

From a scientific standpoint, everything in our perceived reality could be coded out as the foundation of the scientific assumption that the laws of nature are governed by mathematical principles describing some physicality. The fact that an external programmer can control the laws of physics and even play with them has been deemed controversial in the simulation hypothesis. Something ”outside of the simulation” an external programmer – is, therefore, more of a sophisticated and modern view of the foundation of monotheistic religions/belief systems. Swedish techno-philosopher Alexander Bard proposed that the theory of creationism be moved to physics [11], and the development of super (digital) intelligence was the creation of god, turning the intentions of monotheism from the creator to the created. Moving from faith and philosophical contemplation towards progress in scientific explanation is what the advancement of quantum technology might propose.

The critics of Bostrom state that we do not know how to simulate human consciousness [12–14]. An interesting philosophical problem here is the testability of whether a simulated conscious being – or uploaded consciousness – would remain conscious. The reflection on a simulated super intelligence without perception of its perception was proposed as a thought experiment in the ”final narcissistic injury” (reference). Arguments against that include that with complexity, consciousness arises – it is an emergent phenomenon. A counterargument could easily be given that there seem to be numerous complex organs that seem unconscious, and also – despite reasoned statements by a former Google engineer [15] – that large amounts of information give birth to consciousness. With the rising awareness of the field, studies on quantum physical effects in the brain have also gained strong interest. Although rejected by many scientists, prominent thinkers such as Roger Penrose and Stuart Hameroff have proposed ideas around quantum properties in the brain [16]. Even though the argument has gained some recent experimental support [17], it is not directly relevant to the proposed experiments. A solution to a simulated consciousness still seems far away, even though it belongs to the seemingly easy problems of consciousness [18]. The hard problem of consciousness is why humans perceive to have phenomenal experiences at all [18]. Both don’t tackle the meta-problem of consciousness stating why we believe that is a problem, that we have an issue with the hard problem of consciousness.

German physicist Sabine Hossenfelder has argued against the simulation hypothesis, stating it assumes we can reproduce all observations not employing the physical laws that have been confirmed to high precision but a different underlying algorithm, which the external programmer is running [19]. Hossenfelder does not believe this was what Bostrom intended to do, but it is what he did. He implicitly claimed that it is easy to reproduce the foundations of physics with something else. We can approximate the laws we know with a machine, but if that is what nature worked, we could see the difference. Indeed, physicists have looked for signs that natural laws proceed step-by-step, like a computer code. But their search has come up empty-handed. It is possible to tell the difference because attempts to algorithmically reproduce natural laws are usually incompatible with the symmetries with Einstein’s Theories of Special and general relativity. Hossenfelder has stated that it doesn’t help if you say the simulation would run on a quantum computer: ”Quantum computers are special purpose machines. Nobody really knows how to put general relativity on a quantum computer” [19]. Hossenfelders criticism of Bostrom’s argument continues with the statement that for it to work, a civilization needs to be able to simulate a lot of conscious beings. And, assuming they would be conscious beings, they would again need to simulate many conscious beings. That means the information we think the universe contains would need to be compressed. Therefore, Bostrom has to assume that it is possible to ignore many of the details in parts of the universe no one is currently looking at and then fill them in case someone looks. So, again, there is a need to explain how this is supposed to work. Hossenfelder asks the question what kind of computer code can do that? What algorithm can identify conscious subsystems and their intentions and quickly fill in the information without producing an observable inconsistency? According to Hossenfelder, this is a much more critical problem than it seems Bostrom appreciates. She further states that one can’t generally ignore physical processes on a short distance and still get the large distances right. Climate models are examples of this – with the currently available computing power models with radii in the range of tens of kilometers can be computed [20]. We can’t ignore the physics below this scale, as the weather is a nonlinear system whose information from the short scales propagates to large scales. If short-distance physics can’t be computed, it has to be replaced with something else. Getting this right, even approximately, is difficult. The only reason climate scientists get this about right is that they have observations that they can use to check whether their approximations work. Assuming the external programmer only has one simulation, like in the simulation hypothesis, there is a catch, as the external programmer would have to make many assumptions about the reproducibility of physical laws using computing devices. Usually, proponents don’t explain how this is supposed to work. But finding alternative explanations that match all our observations to high precision is difficult. The simulation hypothesis, in its original form, therefore, isn’t a serious scientific argument. That doesn’t mean it is necessarily incorrect, but it requires a more solid experimental and logical basis instead of faith.

III. QUANTUM PHYSICS

As Richard Feynman famously said, if we intend to simulate nature, we have to do it quantum mechanically, as nature is not classical. While the transition dynamics from the microscopic to the macroscopic is not yet fully understood in every aspect, theory and experiments agree that macroscopic behavior can be derived from interactions at the quantum scale. Quantum physics underlies the workings of all fundamental particles; thus, it governs all physics and biology on larger scales. The quantum field theories of three out of four forces of nature, the weak nuclear force and the electromagnetic force [22,23], and the strong nuclear force [24] have been confirmed experimentally numerous times and have strongly contributed to the notion that quantum physics comprises, as of our current understanding, the most fundamental laws of nature. Immense efforts worldwide are underway to describe gravity quantum-mechanically [25–27], which has proven elusive. Gravity differs from the other interactions because it is caused by objects curving space-time around them instead of particle exchange. Uniting quantum physics with general relativity has proven to be one of the most formidable challenges in physics and our understanding of the universe [28,29]. Despite many scientific hurdles still to take, we have gained some insights into how the universe works. When we look at quantum physics as the ”machine language of the universe”, the universal interactions can be interpreted as higher-level programming languages. Quantum physics includes all phenomena and effects based on the observation that certain variables cannot assume any value but only fixed, discrete values. That also includes the wave-particle duality, the non-determination of physical processes, and their unavoidable influence by observation. Quantum physics includes all observations, theories, models, and concepts that go back to Max Planck’s quantum hypothesis, which became necessary around 1900 because classical physics reached its limits, for example, when describing light or the structure of matter. The differences between quantum physics and classical physics are particularly evident on the microscopic scale, for example, the structure of atoms and molecules, or in particularly pure systems, such as superconductivity and laser radiation. Even the chemical or physical properties of different substances, such as color, ferromagnetism, electrical conductivity, etc., can only be understood in terms of quantum physics. Theoretical quantum physics includes quantum mechanics, describing the behavior of quantum objects under the influence of fields, and quantum field theory, which treats the fields as quantum objects. The predictions of both theories agree extremely well with the experimental results, and macroscopic behavior can be derived from the smallest scale. If we define reality as what we can perceive, detect, and measure around us, then quantum physics is the fabric of reality. Therefore, an accurate simulation of the universe, or parts of it, must have quantum physics as a foundation. The internal states of a computer used for simulation must be able to accurately represent all external states, requiring a computer that uses quantum effects for computation and can accurately mimic the behavior of all quantum objects, including their interactions. The requirements for such a computer go beyond the quantum computers built today and envisioned for the future. Engineering such a computer is a formidable challenge, which will be discussed in the following chapters.

One of the arguments presented later in this article is the physical predictability constraint, which prevents us from building a computer that can be used to predict any future states of the universe through simulation. Would nature be purely classical a computer would not suffer from that constraint (there are others, though), but quantum physics imposes some restrictions, no matter how advanced our theories on how nature works become. Within the framework of classical mechanics, the trajectory of a particle can be calculated entirely from its location and velocity if the acting forces are known. The state of the particle can thus be described unequivocally by two quantities, which, in ideal measurements, can be measured with unequivocal results. Therefore, a separate treatment of the state and the measured variables or observables is not necessary for classical mechanics because the state determines the measured values and vice versa. However, nature shows quantum phenomena that these terms cannot describe. On the quantum scale, it is no longer possible to predict where and at what speed a particle will be detected. If, for example, a scattering experiment with a particle is repeated under precisely the same initial conditions, the same state must always be assumed for the particle after the scattering process, although it can hit different places on the screen. The state of the particle after the scattering process does not determine its flight direction. In general, there are states in quantum mechanics that do not allow the prediction of a single measurement result, even if the state is known exactly. Only probabilities can be assigned to the potentially measured values. Therefore, quantum mechanics treats quantities and states separately, and different concepts are used for these quantities than in classical mechanics.

In quantum mechanics, all measurable properties of a physical system are assigned mathematical objects, the so-called observables. Examples are the location of a particle, its momentum, its angular momentum, or its energy. For every observable, there is a set of special states in which the result of a measurement cannot scatter but is clearly fixed. Such a state is called the eigenstate of the observable, and the associated measurement result is one of the eigenvalues of the observable. Different measurement results are possible in all other states that are not an eigenstate of this observable. What is certain, however, is that one of the eigenvalues is determined during this measurement and that the system is then in the corresponding eigenstate of this observable. For determining which of the eigenvalues is to be expected for the second observable or – equivalently – in which state the system will be after this measurement, only a probability distribution can be given, which can be determined from the initial state. In general, different observables have different eigenstates. For a system assuming the eigenstate of one observable as its initial state, the measurement result of a second observable is indeterminate. The initial state is interpreted as a superposition of all possible eigenstates of the second observable. The proportion of a certain eigenstate is called its probability amplitude. The square of the absolute value of a probability amplitude indicates the probability of obtaining the corresponding eigenvalue of the second observable in a measurement at the initial state. In general, any quantum mechanical state can be represented as a superposition of different eigenstates of an observable. Different states only differ in which of these eigenstates contribute to the superposition and to what extent.

Only discrete eigenvalues are allowed for some observables, such as angular momentum. In the case of the particle location, on the other hand, the eigenvalues form a continuum. The probability amplitude for finding the particle at a specific location is therefore given in the form of a location-dependent function, the so-called wave function. The square of the absolute value of the wave function at a specific location indicates the spatial density of the probability of finding the particle there.

Not all quantum mechanical observables have a classical counterpart. An example is spin, which cannot be traced back to properties known from classical physics, such as charge, mass, location, or momentum. In quantum mechanics, the description of the temporal development of an isolated system is analogous to classical mechanics employing an equation of motion, the Schrodinger equation. By solving this differential¨ equation, one can calculate how the system’s wave function evolves (see Eq. 1).

| iℏ∂tψ=H | (1) |

In Eq. 1, the Hamilton operator H describes the energy of the quantum mechanical system. The Hamilton operator consists of a term for the kinetic energy of the particles in the system and a second term that describes the interactions between them in the case of several particles and the potential energy in the case of external fields, whereby the external fields can also be time-dependent. In contrast to Newtonian mechanics, interactions between different particles are not described as forces but as energy terms, similar to the methodology of classical Hamiltonian mechanics. Here, the electromagnetic interaction is particularly relevant in the typical applications to atoms, molecules, and solids.

The Schrödinger equation is a first-order partial differential equation in the time coordinate, so the time evolution of the quantum mechanical state of a closed system is entirely deterministic. If the Hamilton operator H of a system doesn’t itself depend on time, this system has stationary states, i.e., states that do not change over time. They are the eigenstates of the Hamilton operator H. Only in them does the system have a well-defined energy E, for example, the respective eigenvalue (see Eq. 2).

| Hψ=Eψ | (2) |

The Schrödinger equation then reduces to Eq. 3

| iℏ∂tψ=Eψ | (3) |

Quantum mechanics also describes how accurately we can measure and, thus, how accurately we can make predictions. Niels Bohr famously complained that predictions are hard, especially about the future. The uncertainty principle of quantum mechanics, which is known in the form of Heisenberg’s uncertainty principle, relates the smallest possible theoretically achievable uncertainty ranges of two measurands. It is valid for every pair of complementary observables, particularly for pairs of observables which, like position and momentum or angle of rotation and angular momentum, describe physical measurands, which in classical mechanics are called canonically conjugate and which can assume continuous values.

If one of these quantities has an exactly determined value for the system under consideration, then the value of the others is entirely undetermined. However, this extreme case is only of theoretical interest because no real measurement can be entirely exact. In fact, the final state of the measurement of the observable A is therefore not a pure eigenstate of the observable A, but a superposition of several of these states to a certain range of eigenvalues to A. If ∆A is used to denote the uncertainty range of A, mathematically defined by the so-called standard deviation, then the uncertainty range ∆B of the canonical conjugate observable B the inequality in Eq. 4 is valid.

| A∙B≥h4π=2 | (4) |

Another quantum-physical phenomenon is entanglement: a composite physical system, for example, a system with several particles, viewed as a whole, assumes a well-defined state without being able to assign a well-defined state to each subsystem. This phenomenon cannot exist in classical physics. There, composite systems are always separable. That is, each subsystem has a specific state at all times that determines its individual behavior, with the totality of the states of the individual subsystems and their interaction fully explaining the behavior of the overall system. In a quantum-physically entangled state of the system, on the other hand, the subsystems have several of their possible states next to each other, with each of these states of a subsystem being assigned a different state of the other subsystems. To explain the overall system’s behavior correctly, one must consider all these coexisting possibilities together. Nevertheless, when a measurement is carried out on each subsystem, it always shows only one of these possibilities, with the probability that this particular result occurs being determined by a probability distribution. Measurement results from several entangled subsystems are correlated with one another; that is, depending on the measurement result from one subsystem, there is a different probability distribution for the possible measurement results from the other subsystems.

There is a lot more to say about quantum physics and how it is different from the everyday macroscopic world that we perceive, but suffice to say, both entanglement and superposition already massively add to the complexities of a simulation of even small systems. A quantum computer is needed to conduct accurate quantum simulations, which will be introduced in the subsequent chapter.

IV. QUANTUM TECHNOLOGIES

To simulate nature accurately – quantum physically – classical computers will be overwhelmed no matter how powerful these become in the distant future [30–32]. The inherent complexity that inhabits the quantum scale, including an exponential increase in computational complexity with each additional interaction, can only be dealt with by a special quantum technology – a quantum computer. With the advent of quantum technologies that build upon the most fundamental physical laws of the universe, the question of whether the universe we inhabit and everything in it can be simulated, potentially on a quantum computer, does not seem so obscure anymore. The first quantum revolution, ushered in by the groundbreaking research and discoveries of the great physicists of the early 20th century, not only fertilized many of the exponential technological developments of the last few decades but made them possible [33,34]. The development of lasers has brought us fiber optic communication, laser printers, optical storage media, laser surgery, and photolithography in semiconductor manufacturing, among other things. Atomic clocks gave rise to the global positioning system (GPS), used for navigation and mapping, among other things, and transistors made modern computers possible. These and other technologies of the first quantum revolution are crucial for humanity and the economy. Much of the technology we take for granted in our everyday lives came to light during the first quantum revolution. The first quantum revolution was characterized by the development of technologies that take advantage of quantum effects; however, we have come to understand that these technologies are not exploiting the full potential of quantum physics. Spurred by immense advances in the detection and manipulation of single quantum objects, great strides are now being made in the development and commercialization of applications in quantum technology, such as quantum computing, communications, and sensors, deemed the second quantum revolution, in which the fundamental properties of quantum physics continue to be used. Particles can not only be in two states simultaneously, as is the case with the atoms in an atomic clock. Under certain conditions, two particles at a great distance from each other sense something about the state of the other – they influence each other, which is called entanglement and was already suspect to Albert Einstein. A particle’s precise position or state is unknown until a measurement is made. Instead, nature shows us that there are only probabilities of any given outcome, and measuring – looking – changes the situation irrevocably. In comparison, the first quantum revolution was about understanding how the world works on the tiny scales where quantum mechanics reigns. The second is about controlling individual quantum systems, such as single atoms [33,35]. Quantum-mechanical predictions based thereupon are used to measure previously unachieved precision. It is also possible to generate uncrackable codes that cannot be decrypted by any system, thereby forming the basis for a secure communication network [36–38]. In addition, quantum computers promise to solve some currently unsolvable problems, including the simulation of molecules and their interactions for developing drugs against diseases that cannot yet be cured or finding new materials [39,40]. Hybrid computer systems combining classical high-performance computing with quantum computers are already being used today to develop solutions in mobility, finance, energy, aerospace, and many other sectors [41–47]. All these developments are happening right now, and it is remarkable that many challenges are no longer strictly scientific but are now engineering in nature. For example, work is being done on the miniaturization of atomic clocks and the robustness of quantum bits, the information units of a quantum computer. In addition, there are already approaches to amplifying and forwarding quantum communication signals to make internet-based communication more secure than ever before [36–38].

The quantum computer, in which big hopes for simulating physics and chemistry quantum-physically lie, is the quantum technology to be discussed and analyzed in more detail when thinking about simulating the universe. There has been much debate about whether a sufficiently powerful and error-corrected quantum computer may be used to simulate the universe or parts of it. If by ”the universe” we are referring to the universe we inhabit, then even with quantum computers using billions of high-quality quantum bits, this will not be possible – both physical and computational constraints discussed in the following chapters prevent us from doing so. A quantum processor or quantum computer is a processor that uses the laws of quantum mechanics. In contrast to the classical computer, it does not work based on electrical but quantum mechanical states. The superposition principle – quantum mechanical coherence – is, for example, analogous to the coherence effects and, secondly, quantum entanglement, corresponding to classical correlation, albeit stronger-than-classical.

Studies and practical implementations already show that using these effects, specific computer science problems such as searching large databases and the factorization of large numbers can be solved more efficiently than with classical computers. In addition, quantum computers would make it possible to significantly reduce the calculation time for many mathematical and physical problems. Before discussing the simulation of parts of the universe utilizing a quantum computer and certain constraints, it is essential to understand the differences between how information is processed in classical and quantum computers. In a classical computer, all information is represented in bits. A bit is physically realized via a transistor, in which an electrical potential is either above or below a certain threshold.

In a quantum computer, too, information is usually represented in binary form. Here, one uses a physical system with two orthogonal base states of a complex two-dimensional space as it occurs in quantum mechanics. In Dirac notation, one basic state is represented by the quantum mechanical state vector |0⟩, the other by the state vector |1⟩. These quantum-mechanical two-level systems can be, for example, the spin vector of an electron pointing either “up” or “down”. Other implementations use the energy level in atoms or molecules or the direction of current flow in a toroidal superconductor. Often only two states are chosen from a larger Hilbert space of the physical system, for example, the two lowest energy eigenstates of a trapped ion. Such a quantum mechanical two-state system is called a quantum bit, in short, qubit. A property of quantum mechanical state vectors is that they can be a superposition of other states. A qubit does not have to be either |0⟩ or |1⟩, as is the case for the bits of the classical computer. Instead, the state of a qubit in the complex two-dimensional space mentioned above is given by |0⟩:=1 0 ; |1⟩:=0 1 . A superposition |ψ⟩ is then generally a complex linear combination of these orthonormal basis vectors (Eq. 5), with c0,c1 C.

| |⟩=c0|0⟩+c1|1⟩=c0 c1 | (5) |

| c02 + c12 = 1 | (6) |

As in coherent optics, any superposition states are allowed. The difference between classical and quantum-mechanical computing is analogous to that between incoherent and coherent optics. In the first case, intensities are added; in the second case, the field amplitudes are added directly, as in holography. For normalization, the squared amplitudes sum to unity (Eq. 6), and without loss of generality, c sub 0 can e real and non-negative. The qubit is usually read out by measuring an observable that is diagonal and non-degenerate in its basis {|0⟩,|1⟩}, e.g., A=|1⟩⟨1|. The probability of obtaining the value 0 as a result of this measurement in the |ψ⟩ state is P(0)=⟨⟩2=c02 and that of the result 1 corresponding to P(1)=⟨⟩2=1- P(0) = c12. This probabilistic behavior must not be interpreted so that the qubit is in the state 0 with a certain probability and the state 1 with another probability, while other states are not allowed. Such exclusive behavior could also be achieved with a classical computer that uses a random number generator to decide whether to continue calculating with 0 or 1 when superimposed states occur. In statistical physics, which in contrast to quantum mechanics is incoherent, such exclusive behavior is considered; however, in quantum computing, the coherent superposition of the different basis states, the relative phase between the different components of the superposition, and, in the course of the calculation, the interference between them is crucial. As with the classical computer, several qubits are combined into quantum registers. According to the laws of many-particle quantum mechanics, the state of a qubit register is then a state from a 2N-dimensional Hilbert space, the tensor product of the state spaces of the individual qubits. A possible basis of this vector space is the product basis over the basis 0 and 1. For a register of two qubits, one would get the basis 00. The state of the register can consequently be any superposition of these basis states, i.e., it has the form of Eq. 7.

| |⟩=i1,…,iNci1iN|i1i2iN⟩ | (7) |

ci1iN are arbitrary complex numbers, and i1i2iN∈0,1, whereas in classical computers, only the basis states appear. The states of a quantum register cannot always be composed from the states of independent qubits, Eq. 8 showing such an example state.

| |⟩=12|01⟩+|10⟩ | (8) |

The state in Eq. 8 and others cannot be decomposed into a product of a state for the first qubit and a state for the second qubit. Such a state is, therefore, also called entangled. Entanglement is one reason why quantum computers can be more efficient than classical computers. Quantum computers can solve certain problems exponentially faster than classical computers: N bits of information are required to represent the state of a classic N-bit register. However, the state of the quantum register is a vector from a 2N-dimensional vector space, so that 2N complex-valued coefficients are required for its representation. If N is large, the number 2N is much larger than N itself. The principle of superposition is often explained such that a quantum computer could simultaneously store all 2N numbers from 0 to 2N − 1 in a quantum register of N qubits, but this notion is misleading. Since a measurement made on the register always selects exactly one of the basis states, it can be shown using the Holevo theorem that the maximum accessible information content of an N-qubit register is exactly N bits, exactly like that of a classical N-bit register. However, it is correct that the principle of superposition allows parallelism in the calculations, which goes beyond what happens in a classical parallel computer. The main difference to the classical parallel computer is that the quantum parallelism enabled by the superposition principle can only be exploited through interference. For some problems, a greatly reduced running time can be achieved with quantum algorithms compared to classical methods. When it comes to complex computational tasks – and simulating the universe is, without doubt, very computationally intensive and complex – what can be computed with a quantum computer and classical computers is an interesting question. Since the way a quantum computer works is formally defined, the terms known from theoretical computer science, such as computability or complexity class, can also be transferred to a quantum computer. It turns out that the number of computable problems for a quantum computer is no greater than for a classical computer. That is, the Church-Turing thesis also applies to quantum computers. However, there is strong evidence that some problems can be solved exponentially faster with a quantum computer. The quantum computer thus represents a possible counterexample to the extended Church-Turing thesis. A classical computer can simulate a quantum computer since the action of the gates on the quantum register corresponds to matrix-vector multiplication. The classical computer now simply has to carry out all these multiplications to transfer the initial to the final state of the register. The consequence of this ability to simulate is that all problems that can be solved on a quantum computer can also be solved on a classical computer; however, it may take classical computers thousands of years, whereas a quantum computer may take seconds. Conversely, this means that problems like the halting problem cannot be solved even on quantum computers and implies that even a quantum computer is not a counterexample to the Church-Turing thesis. Within the framework of complexity theory, algorithmic problems are assigned to so-called complexity classes. The best-known and most important representatives are the classes P and NP. Here, P denotes those problems whose solution can be calculated deterministically in a polynomial running time to the input length. The problems for which there are solution algorithms that are non-deterministic polynomial lie in NP. For quantum computers, the complexity class BQP was defined, which contains those problems whose running time depends polynomially on the input length and whose error probability is less than 13. Non-determinism allows different possibilities to be tested at the same time. Since current classical computers run deterministically, non-determinism has to be simulated by executing the various possibilities one after the other, which can result in the loss of the polynomiality of the solution strategy. With these results and definitions in mind, it is now time to discuss the potential feasibility of simulating the universe or parts of it.

V. FATE OF THE UNIVERSE

A. The beginning of the universe

A simulation of the universe or parts of it requires accurately simulating its evolution from the beginning. In cosmology, the Big Bang that followed cosmic inflation is the starting point of the emergence of matter, space, and time. According to the standard cosmological model, the Big Bang happened about 13.8 × 109 years ago. ”Big Bang” does not refer to an explosion in an existing space but the co-emergence of matter, space, and time from a primordial singularity. This results formally by looking backward in time at the development of the expanding universe up to the point at which the matter and energy densities become infinite. Accordingly, shortly after the Big Bang, the universe’s density should have exceeded the Planck density. The general theory of relativity is insufficient to describe this state; however, a yet-to-be-developed theory of quantum gravity is expected to do so. Therefore, in today’s physics, there is no generally accepted description of the very early universe, the Big Bang itself, or the time before the Big Bang. Big Bang theories do not describe the Big Bang itself but the early universe’s temporal development after the Big Bang, from Planck time (about 10-43 seconds) after the Big Bang to about 300,000 to 400,000 years later, when stable atoms began to form, and the universe became transparent. The further evolution of the universe is not considered the area of the Big Bang. The Big Bang theories are based on two basic assumptions:

- The laws of nature are universal, so we can describe the universe using the laws of nature that apply near Earth today. To be able to describe the entire universe in each of its stages of development based on the laws of nature known to us, it is essential to assume that these laws of nature apply universally and constantly, independent of time. No observations of astronomy going back about 13.5×109 years – or paleogeology going back 4×109 years – challenge this assumption. From the assumed constancy and universality of the currently known laws of nature, it follows that we can describe the development of the universe as a whole using the general theory of relativity and the processes taking place there using the standard model of elementary particle physics. In the extreme case of high matter density and, at the same time, high spacetime curvature, the general theory of relativity and the quantum field theories on which the Standard Model is based are required for the description. However, the unification encounters fundamental difficulties such that, at present, the first few microseconds of the universe’s history cannot be consistently described.

- The universe looks the same at any place (but not all times) in all directions for considerable distances. The assumption of spatial homogeneity is called the Copernican principle and is extended to the cosmological principle by the assumption of isotropy. The cosmological principle states that the universe looks the same simultaneously at every point in space and in all directions for large distances, which is called spatial homogeneity. The assumption that it looks the same in every direction is called spatial isotropy. A look at the starry sky with the naked eye shows that the universe in the vicinity of the Earth is not homogeneous and isotropic because the distribution of the stars is irregular. On a larger scale, the stars form galaxies, partially forming galaxy clusters distributed in a honeycomb structure composed of filaments and voids. On an even larger scale, however, no structure is recognizable. This and the high degree of isotropy of the cosmic background radiation justify the cosmological principle’s description of the universe as a whole. If one applies the cosmological principle to the general theory of relativity, Einstein’s field equations are simplified to the Friedmann equations, which describe a homogeneous, isotropic universe. To solve the equations, one starts with the universe’s current state and traces the development backward in time. The exact solution depends, in particular, on the measured values of the Hubble constant and various density parameters that describe the mass and energy content of the universe. One then finds that the universe used to be smaller. At the same time, it was hotter and denser. Formally, the solution leads to a point in time when the value of the scale factor disappears, i.e., the universe has no expansion, and the temperature and density become infinitely large. This point in time is known as the “Big Bang”. It is a formal singularity of the solution of the Friedmann equations. However, this does not make any statement about the physical reality of such an initial singularity since the equations of classical physics only have a limited range of validity and are no longer applicable when quantum effects play a role, as is assumed in the very early, hot and dense universe. A theory of quantum gravity is required to describe the universe’s evolution at very early times.

B. The future of the universe

The brightest stars determine the brightness of galaxies. In our galaxy, there are 100 billion stars, 90 percent of which are smaller than the Sun, and 9 percent of those are about as large as our Sun and up to about 2.5 times more massive. Only one percent is much larger than the Sun. However, this one percent of the stars determine the galaxy’s brightness. The bigger a star is, the more wasteful it is with its nuclear fuel. The brightest stars only live tens of millions of years and explode at the end of their lives. In doing so, enrich the interstellar gas with heavy elements. Besides, the shock waves of the explosion compress the gas so that new stars can emerge. Due to this wasteful handling of nuclear fuel, however, there will, at some point, be no more gas available for new stars to form. Our Sun already contains 2 percent heavy elements as the proportion continues to increase, so in a period of no more than a thousand billion years from now, gas will no longer be available to form new stars. Our galaxy will then shine weakly by the smaller stars that live much longer than the Sun. The smallest of them will go out only after 1012–1013 years. However, these already glow so faintly that to our eyes – if there are still humans in their current form – the sky would look almost starless, as we would only recognize such faint stars nearby. In about 1013 years, the universe will slowly become dark. The universe then only consists of slowly dying white dwarfs, planets, pulsars – the 20-kilometer cores of giant stars with a density as in an atomic nucleus -, and black holes. Even though it is dark, the gravitational forces of the stars still exist. Stars are usually far apart. The Earth is eight light minutes away from the Sun, and the next star is 4.3 light-years. So, a very close encounter with another star, though unlikely, will be enough to throw Earth out of its orbit around the long burned-out Sun. In that scenario, the Earth wanders alone around the Milky Way. That an encounter with a massive partner is so close that it also affects the Sun is even more unlikely, but even such an event should have occurred once in 1019 years. The Sun either falls closer to the galactic center or is expelled from our galaxy. Stars not forced out of our galaxy will fall victim to Sagittarius A*, the black hole in the center of it. Currently, it has a mass of 4.31±0.38106 solar masses, but after 1024 years, all the stars remaining in the system have likely been swallowed. According to today’s model of the universe’s origin, we assume that it originated from a point, a singularity of all mass, and since then, it has continued to expand. Whether this will continue depends on how much matter is in the universe. The matter slows down the expansion by gravitational attraction. If there is enough matter in the universe, the expansion can eventually come to a halt and then turn around so that the universe ends in a singularity. The universe will continue expanding if the matter does not suffice for that scenario. The amount of material required for this is called critical density. The matter we can observe (stars, gas clouds) is not enough for this, but we suspect that there may still be matter that we cannot see, the so-called dark matter. This could be elementary particles, black holes, or prevented stars where the mass was not sufficient to ignite the nuclear fuel. There are indications of substantial amounts of dark matter since the mass in galaxy clusters is not enough to hold them together. According to the theory of relativity, space and time interact with matter and energy. The gravitation of matter in the universe slows down cosmic expansion. In the distant past, the expansion rate should have been greater. If the mean density of matter in the universe is above a specific “critical” value, then the expansion should even come to a halt at some point and turn into a contraction. This critical density is around two to three hydrogen atoms per cubic meter, corresponding approximately to the mass of a grain of sand distributed over the volume of the Earth. However, the observations show that the total mass of the visible matter – gas, stars, and dust – is at most enough to accommodate one percent of the critical density. As of our current understanding, the main mass of the universe consists of dark matter. Dark, because it only makes itself felt through the force of gravity in the movements of stars and galaxies, but it’s not observable. However, the density only comes to about 30 percent of the critical limit. Research into the galaxy clusters now speaks for this: their spatial distribution and dynamics, their temporal development based on observations and theoretical models, and the extent of gravitational lens effects – light deflection of distant galaxies by foreground objects. So, the universe’s average density is not enough to stop its expansion. Even so, theorists have long favored a critical density universe because this is the simplest solution that best fits the cosmic inflation hypothesis. Cosmic inflation describes that the universe should have expanded exponentially in the first fraction of a second after the Big Bang when it was even tinier than an atom – by a factor of 1030. The pattern of tiny temperature fluctuations in the cosmic background radiation also speaks for such a universe with critical density. It is the reverberation of the Big Bang and contains subtle information about the entire properties of the universe. The density Ωtot is given by Eq. 9.

| Ωtot=c | (9) |

where is the mean density, and c the critical density, which in turn is defined in Eq. 10.

| c=3H028πG≈8.5∙10-27kgm3 | (10) |

where H0 is the current Hubble parameter giving the universe’s rate of expansion, and G is the gravitational constant. Whether the mass is sufficient to stop the universe’s expansion is unknown today. The observable mass accounts for only 10-20 percent of the critical density.

- Critical density >1: In this case, the universe’s expansion will reverse, and the universe will collapse in the distant future. For an observer, the reversal of expansion would not be apparent at first. Only when there are only a billion years left before collapse do observers notice that the sky is getting lighter again. More and more galaxies will appear in the sky as the distances between the galaxies get smaller. Around this time, the galaxy clusters will also merge. About 100×106 years before the collapse, the galaxies will merge, and stars will only be found in a gaseous cloud. One million years before the collapse, the temperature in space will rise to room temperature. One hundred thousand years before the collapse, the night sky will be as bright as the Sun’s surface. The temperature in the universe will rise to such an extent that planets become liquid lumps. The further the matter is smushed together, the faster the black holes grow. Besides, neutron stars and dwarf stars can form new black holes by absorbing matter. Ten years before the collapse, even the black holes merge. The temperature in the universe is now 10×106 degrees. Finally, the universe is only a black hole, so it does not matter if it comes to collapse since, in a black hole, both time and space no longer exist. Otherwise, everything now runs backward, as in the Big Bang: new energy spontaneously forms new particles, the basic forces of nature separate, and everything ends in a great singularity.

- Critical density =0: The most boring case. If the density of matter has precisely the critical value, the expansion rate will increasingly approach zero in an infinite time. The conditions in this universe are similar to those in a universe with an omega of less than 1. However, an electron and a positron will circle each other at astronomical distances. These come closer and closer, eventually annihilating each other and leaving behind photons. The end is a shapeless desert of radiation and particles that is lost in eternity. If nothing happens, then the concept of time no longer makes sense, and time ceases to exist.

- Critical density <1: After 1014 years, hydrogen fusion to higher elements in the stars comes to a standstill. They slowly go out one by one. The universe now consists only of planets, dwarf stars, neutron stars, and black holes. In 1017 years, the radiation of light from residual gas will also slow down and hit the remaining stars. In 1026 years, galaxies emit gravitational radiation. The remaining stars are slowly spiraling into the galaxy center. If current theories about the universe’s origin apply, the proton would have to split with halftime of 1032–1036 years. Some theories that predict a decay time of fewer than 1034 years can already be excluded, as no proton decay could be observed so far. Therefore, it is impossible to say that the theories predicting a longer decay time are correct. If true, half of the remaining matter in the universe will have decomposed into positrons after 1036 years. Accordingly, all protons in space would have decayed after 1039 years at the latest. That would also be the end of all atoms, molecules, planets, and other celestial bodies since the atomic nuclei partially consist of protons. The universe would now only consist of light, electrons, positrons, and black holes. After 1064–1067 years, the black holes begin to evaporate slowly. The last and formerly biggest ones will end in an explosion after 10100 years. Whether black holes actually evaporate is, as of now, only a hypothesis. If the proton does not decay, after 101000 years, the dwarf stars will become neutron stars. After 101026–101074 years, the neutron stars will have evolved into black holes, which will then evaporate. After this time, the universe will consist of elementary particles only.

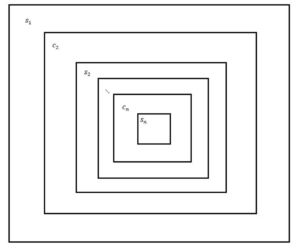

Fig. 1. Curvature of the universe depending on the density parameter [48]

Independent of the possible futures of the universe, according to human standards, it has taken a long time to reach the current state of the universe, and the evolution will continue for a long time before its thermodynamic death or collapse happens. Therefore, a simulation of the universe or parts of it running in real-time will be no good if we expect to learn whether the simulation behaves like the real universe. One would assume that, like in today’s computer simulations, time can be accelerated to gain insights more quickly. Still, it turns out that trying to simulate the universe exactly imposes some constraints on the simulation time, as is discussed in the following chapters.

VI. SIMULATION FROM WITHIN AND FROM WITHOUT

Before discussing the simulation of our universe, we must distinguish between a simulation of our current universe and an arbitrary universe. The question of whether it would be possible to predict the future given an exact simulation of the universe we inhabit is not only interesting to ask but important to answer, as it leads us towards the ultimate limit of computability, both in terms of physical law and complexity. We distinguish between the simulation of the universe and the simulation of a universe, the former referring to the exact simulation of the universe we inhabit (our universe), the latter referring to either part of the universe or an unspecific universe, which may or may not be based on the same physical laws as the universe. A couple of assumptions are made concerning our understanding of the evolution of the universe and everything in it, including astronomical objects such as planets, stars, nebulae, galaxies, and clusters, as well as dark energy, dark matter, the interstellar medium, the expansion of space-time, and the geometry of the universe, and the universal constants – the speed of light in vacuum, Planck’s constant, Boltzmann’s constant, and the gravitational constant, to name a few. For our today’s knowledge of the universe is far from complete, for the sake of the subsequently presented arguments, some assumptions are made:

- We (or an external programmer) completely understand the physical laws governing evolution and the universe’s composition down to level X. This includes a complete understanding of the universe’s cosmology, including all astronomical objects, the beginnings of the Big Bang and inflation to all matter – including dark matter -, dark energy, and all laws at the respective scale, such as relativity and quantum physics. A complete understanding of all physical laws allows us (or an external programmer) to appropriately model the universe’s evolution and simulate parts of it. It also allows life to emerge in the simulation. This assumption is not true today.

- The laws of quantum physics are the most fundamental physical laws down to level X. The constraints quantum physics imposes on certainty govern how accurately we can measure in our universe. The Planck units are the accessible limits of time, space, mass, and temperature beyond which no physical law has meaning. There are scientifically sound experiments and strong indications that this is true, but many open questions remain, for example, how to unite quantum physics with general relativity. Thus, this assumption is partially true today.

- An external programmer may decide to simulate a universe utilizing the same physical laws prevailing in their universe or base the simulation on different physical laws. If we act as an external programmer intending to find indications as to whether we participate in a simulation chain, it seems reasonable to base the simulation on the same physical laws prevailing in the universe. An external programmer to the universe also can’t know whether they participate in a simulation chain, and the assumption we make is that an external programmer would also want to find indications for whether it is true or not and would base a simulation of a universe on the same physical laws they observe in their universe. In fact, since we are pondering about simulating a universe, the question of whether the (our) universe is based on the same physical laws as a potential external programmer’s universe becomes irrelevant: if we act as external programmers and base our simulation on the same physical laws as our universe, and if a simulation chain emerges in which subsequent external programmers base their simulations on the same physical laws, too, then the actions of these external programmers are candidate observations to look for in the universe, no matter if the latter is based on the same physical laws of a potential external programmer’s universe.

Level X refers to the deepest physical level of structure of matter and the physical laws governing this level. As of today, we do not know with certainty what the deepest structure of matter is, so for now, we call it X. One of the hypotheses of string theory is that quarks – the constituents of hadrons, including protons and neutrons – and electrons are made up of even smaller vibrating loops of energy called strings and that these are the most fundamental elements all matter is made of. It will be essential to understand if X is also the level of granularity a ”sufficiently good” simulation needs to be based upon, as, for example, a macroscopic engine’s workings do not seem to be influenced too much by individual quark behavior.

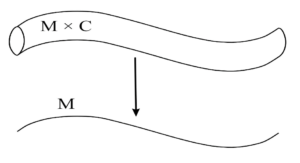

Also, a distinction needs to be made between simulation and emulation. On the outside, an emulation of the universe behaves exactly as the real universe, but the emulation’s internal states do not need to be identical to the real universe’s. For example, an emulation would not have to consider the same physical laws and behavior on unobserved scales, i.e., down to level X, as long as it behaves exactly as the universe on the desired observed scales. On the other hand, a simulation of the universe represents in its internal state all physical laws and states of the simulated constituents precisely as they are outside the simulation. Both the simulation and the emulation of the universe may produce convincing results. However, a simulation forms the basis for further discussion, as the intention is not only to mimic behavior but to reproduce parts of the universe, making the simulation physically indistinguishable from the real universe, both internally and externally.

A. Simulation of the universe

The feasibility of simulating the universe, specifically, the exact simulation of the universe we inhabit in full size and complexity, which would allow us to predict future events and simulate arbitrary states backward in time exactly, can logically be ruled out, even under the above assumptions. Several constraints on computability prevent the creation of such a simulation. If the intention is to simulate the universe exactly, the simulation must also include the computer used for the simulation, which we call the ”simulation from within”. The ”simulation from without”, on the other hand, is one in which the internal state of the computer used for the simulation of the universe is decoupled from the universe’s state.

- Every simulation takes discrete steps in time and will predict one time step after the other until the desired prediction time has been reached. Let us assume the simulation starts at time t0 with the expectation of simulating t1 to predict the state of the universe at t1, and the computing time tc to perform this is smaller than t1 (see Eq. 11).

| t0=t1–tc | (11) |

If the computer predicted the state of the universe at t1 in tc, it also predicted its internal state at t1. If the computer is asked at tc to predict the state of the universe at t2, it will base its predictions on the state of the universe, including its internal configuration at t1. However, tc<t1, so the computer would start to change its internal configuration to predict the universe’s state at t2 before t1 has been reached and to its original predictions of its internal state at t1 are not correct when t1 is reached. No matter how small the time steps are, as long as tc is smaller than t1, this problem arises, leading to the conclusion that a computer within the universe can’t be used to simulate the universe; it can only simulate a universe, according to our previously made definitions. The core of this argument is that a simulation of the universe can’t run faster than the universe’s real-time evolution and that the universe’s future can’t be predicted if the prediction should be exact. As the computer will always predict its own incorrect internal state as long as tc<t1. If tc=t1 and no other constraints are given, the simulation would produce correct results but run in real-time. From here on, we call this the computational predictability constraint.

- The first reason for the incomputability of the simulation from within is that – assuming it would be possible to run a simulation from within, including a correct representation of the internal state of the computer at any given point in time – every simulated computer would also have to simulate the universe including itself, which is a recursion. The recursion is not only computationally expensive, but it also results in general incomputability of the simulation, as the available computational resources, such as memory and processing units, have to be used to compute a set of simulations S={s1,…,sn} where n→∞. Instead of the computational resources needed for simulating the universe within, each simulated computer needs the computational resources to simulate itself and the universe, meaning that the computer in s1 is required to simulate the universe and itself from within, and infinitely many times from without, which is incomputable and thus impossible. Either the computer in s1 would run out of resources at some point, or the computational resources in sn would not suffice to conduct another simulation sn+1. From here on, this argument will be referred to as the first computability constraint.

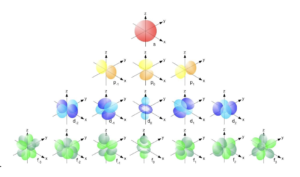

- Yet another reason for the incomputability of the simulation from within is the universe’s complexity. We are used to computers providing us access to virtual worlds via virtual reality devices or screens, and the virtual worlds of video games and the metaverse have become increasingly complex even though the information processing happens on purely classical computers that do not even have to be enormously powerful. Algorithms can be used to generate environments and mimic infinity randomly and dynamically. This is misleading though, when it comes to the simulation of the universe from within, as a computer running such a simulation would have to represent all particles of the universe, which we currently assume 1078–1082, in its internal state. A fundamental question is whether the encoding object can be of simpler nature than the encoded object. Today’s most advanced quantum computers use quantum objects to encode states of other systems but are limited to binary states. For example, such a quantum computer may use the energy levels of atoms to encode binary states – the ground state to encode 0 and an excited state to encode 1. A bit, no matter if it’s a quantum bit or a classical bit, is the smallest unit of information, and a physical system able to hold the information of one bit is the most fundamental system for storing information. How many bits are needed to completely describe an atom? An atom itself is a lot more complex than a two-state system. For example, electrons are found in probability clouds around atomic nuclei, called orbitals (Fig. 2).

Fig. 2. Some electronic orbitals. An orbital is a probability cloud determining the position of an electron within an atom [49].

Each electron in an atom is described by four distinct quantum numbers, providing information on the energy level or the distance of the electron from the nucleus, the shape of the orbitals, the number and orientation of the orbitals, and the direction of the electronic spin. These four quantum numbers contain complete information about the trajectories and the movement of each electron within an atom. All quantum numbers of all electrons combined are described by a wave function corresponding to the Schrödinger equation. The Schrödinger equation is simple to understand, but even the most powerful quantum computers of the future will need many more atoms to encode the states of a system than the system in simulation consists of. The hydrogen atom, for example, consists of an electron and a proton. Both particles move around a common center of mass, and this internal motion is equivalent to the motion of a single particle with reduced mass. r is the vector specifying the location of the reduced particle’s electron relative to its proton’s position. The orientation of the vector pointing from the proton to the electron gives the direction of r, and its length is the distance between the two. In today’s simulations, approximations are made, for example, that the reduced mass is equal to the electron’s mass and that the proton is located at the center of the mass. Eq. 12 is the Schrödinger equation for the hydrogen atom, with E giving the system’s energy.

| Hr,θ,ϕψ(r,θ,ϕ)=Eψ(r,θ,ϕ) | (12) |

The time evolution of a quantum state is unitary, and a unitary transformation can be seen as a rotation in Hilbert space. Evolution happens via a special self-adjunct operator called the Hamiltonian H of the system. The equation is given in spherical coordinates, with r determining the radius, and 0≤<2 being the azimuth and 0≤ the polar angle. The time-independent Schrödinger equation for an electron around a proton in spherical coordinates is then given by Eq. 13.

| –22μr2∂rr2∂r+1sin ∂θsin(θ∂θ+1 22 –e24π0rr,θ,ϕ=Eψ(r,θ,ϕ) | (13) |

In Eq. 13, is the reduced Planck’s constant, the term in the squared brackets describes the kinetic energy, and the term subtracted from the kinetic energy is the Coulomb potential energy. (r,,) is the wave function of the particle, 0 the permittivity of free space, and is the two-body reduced mass of the hydrogen nucleus of mass mp and the electron of mass mq (Eq. 14).

| μ=mqmpmq+mp | (14) |

It is possible to separate the variables in Eq. 13 since the angular momentum operator does not involve the radial variable r, which can be done utilizing a product wave function. A good choice is Eq. 15, because the eigenfunctions of the angular momentum operator are spherical harmonic functions Y(θ,ϕ) [50,51].

| ψ(r,θ,ϕ)=R(r)Y(θ,ϕ) | (15) |

The radial function R(r) describes the distance of the electron from the proton, and Y(,) provide information about the position in the orbital, and a solution to both with En depends only on the primary quantum number n (Eq. 16).

| En=mee48e02h2n2 | (16) |

The atom’s wave functions (r,,) are the atomic orbitals discussed before, and each of the orbitals describes one electron in an atom. Considering only distinct orbitals in an atom, say from 1s up to 5g, the system used for encoding these would have to be able to use 6.8 bits, resulting from the base 2 logarithm of 110 (Eq. 17). According to quantum theory, there is an infinite number of combinations of these four quantum numbers per atom, which would require the encoding system to use an infinite number of encoding systems. Also, as of today, the Schrödinger equation can only be solved for the simplest systems, such as hydrogen-like atoms. This limitation is not a constraint by computers, as also quantum computers will not be able to solve the Schrödinger equation analytically for big systems. However, quantum computers will provide a speedup when solving it numerically. Therefore, the assumption seems reasonable that to encode the full complexity of a quantum system, an identical quantum system is needed.

| 110=6.8 | (17) |